TLOS-DIY XR

XR Instructional App - AR/2D (TLOS-DIY), motion graphics design

Tools: Unity, Vuforia, After Effects, Illustrator, Figma, C#

I developed the DIY Studio Guide XR App to make a campus DIY recording studio easier to use. Built with Unity and Vuforia, the app condenses a 14-page technical instruction manual into a quick, intuitive guide, so students and faculty can operate the studio equipment efficiently without wading through lengthy documentation.

The application offers two interaction modes. A simple 2D interface presents nine motion graphics videos, one for each crucial step in using the studio, accompanied by short descriptive text and navigated with swipes or clicks. Users can also choose an interactive Augmented Reality (AR) experience of the studio itself.

I used the Vuforia Creator App to make a LiDAR scan of the recording space and generate 3D mesh data, which I imported into the Unity game engine. The AR mode uses Vuforia’s Area Target API in Unity, which relies on the scanned data to recognize the studio environment and overlay the instructional motion graphics on the corresponding physical equipment. A navigation system guides the user through the AR experience: an on-screen arrow indicates the direction of the required equipment when it is off-camera, then changes into a target icon that locks onto the equipment once it is recognized, confirming focus and displaying the related tutorial.

I programmed the user interaction and navigation logic in C#. Visual design and storyboarding were done in Figma and Adobe Illustrator, and the motion graphics were produced in Adobe After Effects. By replacing the document with interactive, intuitive instruction, the app shortens the learning curve of the DIY recording studio and helps users operate the facility confidently.

Building the DIY Studio Guide XR App

Phase 1: Problem Analysis & Content Strategy

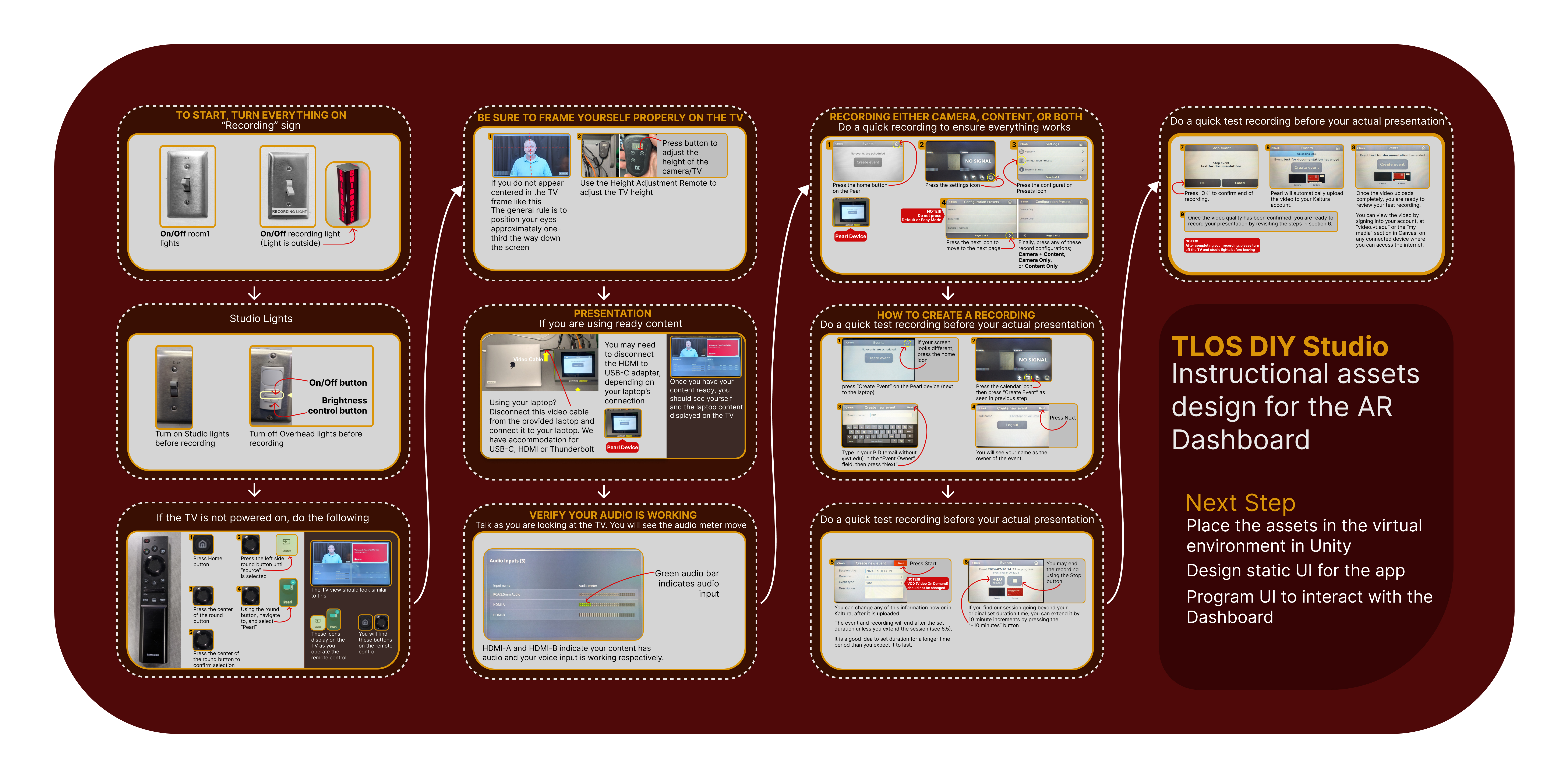

The first step was to identify the underlying issue: the existing 14-page Google Doc guide was too long for users who needed instant, task-based instruction inside the studio. To address this, the essential information was extracted from the document and condensed into a one-page visual storyboard created with Figma and Adobe Illustrator. The storyboard divided complicated procedures into a series of short steps, setting the stage for the app’s instructional content.

Phase 2: Motion Graphics Production

Because visual learning suits step-by-step procedures, the storyboard was converted into video form. Using Adobe After Effects, I designed nine short motion graphics videos. Each animation covers one key step from the storyboard and gives brief, easy-to-follow visual instructions for a particular piece of studio equipment.

Phase 3: Environment Capture & AR Preparation

To deliver a believable AR experience, an accurate digital twin of the recording studio was required. I captured the geometry and spatial information of the physical studio with the Vuforia Creator App, which scans using LiDAR. The scan data was post-processed into a virtual mesh, which I then imported into Unity and set up as a Vuforia Area Target. This step is important because it lets the Unity application recognize the physical environment and understand its structure in real time during AR sessions.

Phase 4: Core App – 2D Mode

With the content and AR groundwork in place, development moved fully into the Unity engine with the Vuforia SDK. I designed and implemented a user interface with control buttons that serves as the hub for the 2D and AR modes. The nine motion graphics videos and their descriptive text passages were imported into the project. I programmed the navigation logic in C#, allowing users to move through the instructional steps with swipe gestures or on-screen buttons.
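The step navigation described above can be illustrated with a minimal, engine-agnostic sketch. This is not the app's actual code: the class name `StepNavigator`, the method names, and the 50-pixel swipe threshold are all assumptions made for illustration. In a Unity project, a class like this would typically be driven from a MonoBehaviour that reads touch input and switches the active video and text.

```csharp
using System;

// Hypothetical helper (not from the original project): tracks which
// instructional step is currently shown and clamps movement to valid steps.
public sealed class StepNavigator
{
    private readonly int stepCount;

    // Zero-based index of the step currently on screen.
    public int Current { get; private set; }

    public StepNavigator(int stepCount)
    {
        if (stepCount <= 0)
            throw new ArgumentOutOfRangeException(nameof(stepCount));
        this.stepCount = stepCount;
    }

    // Button handlers: return false when already at the first or last step.
    public bool Next() => MoveTo(Current + 1);
    public bool Previous() => MoveTo(Current - 1);

    // Swipe handler. A leftward swipe (negative delta) advances and a
    // rightward swipe goes back. Movements shorter than the threshold are
    // ignored. The 50-pixel default is an assumed value.
    public bool HandleSwipe(float horizontalDeltaPixels, float thresholdPixels = 50f)
    {
        if (Math.Abs(horizontalDeltaPixels) < thresholdPixels)
            return false;
        return horizontalDeltaPixels < 0 ? Next() : Previous();
    }

    private bool MoveTo(int index)
    {
        if (index < 0 || index >= stepCount)
            return false;
        Current = index;
        return true;
    }
}
```

Keeping the index logic separate from input and rendering means buttons and swipes share one code path, so both input methods always agree on the current step.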

Phase 5: Unity Development – Creating AR Mode

Next, AR functionality was introduced, informed by feedback from instructional designers and video producers during a testing phase. The Vuforia Area Target created earlier from the scanned studio mesh was placed in the Unity scene. This aligned the coordinate system of the virtual scan with the real studio environment, which in turn made it possible to anchor each motion graphics video to the position of its corresponding physical equipment in the AR view. As a result, when the user points the device at a piece of equipment, the matching tutorial appears spatially anchored to it. Navigation cues help users locate the correct equipment when it is off-screen: an arrow pointer guides the user toward the target object and changes into a target icon once the object has been detected.
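The arrow-to-target-icon behaviour can be sketched as a small geometric calculation. The example below is an illustrative simplification, not the project's implementation: it uses `System.Numerics` instead of Unity types, approximates the camera view as a cone with an assumed 30-degree half angle, and switches to the target icon based on direction alone. In the app, that switch depends on Vuforia recognizing the equipment. The names `GuidanceCueCalculator`, `GuidanceCue`, and `CueKind` are hypothetical.

```csharp
using System;
using System.Numerics;

public enum CueKind { Arrow, TargetIcon }

// Result of the calculation: which cue to show and, for an arrow,
// the on-screen angle in degrees (0 = right, 90 = up).
public readonly struct GuidanceCue
{
    public CueKind Kind { get; }
    public float ArrowAngleDegrees { get; }

    public GuidanceCue(CueKind kind, float arrowAngleDegrees)
    {
        Kind = kind;
        ArrowAngleDegrees = arrowAngleDegrees;
    }
}

public static class GuidanceCueCalculator
{
    // forward, right and up are assumed to be unit-length, mutually
    // perpendicular camera axes. halfFovDegrees is an assumed view-cone
    // half angle, not a value taken from the app.
    public static GuidanceCue Compute(
        Vector3 cameraPosition, Vector3 forward, Vector3 right, Vector3 up,
        Vector3 targetPosition, float halfFovDegrees = 30f)
    {
        Vector3 toTarget = targetPosition - cameraPosition;
        if (toTarget.LengthSquared() < 1e-8f)
            return new GuidanceCue(CueKind.TargetIcon, 0f);

        // Express the direction to the target in camera space.
        Vector3 dir = Vector3.Normalize(toTarget);
        float x = Vector3.Dot(dir, right);
        float y = Vector3.Dot(dir, up);
        float z = Vector3.Dot(dir, forward);

        // Inside the view cone: show the target icon instead of the arrow.
        double cosLimit = Math.Cos(halfFovDegrees * Math.PI / 180.0);
        if (z >= cosLimit)
            return new GuidanceCue(CueKind.TargetIcon, 0f);

        // Outside the cone: point the arrow along the target's
        // sideways and vertical offset from the view direction.
        float angle = (float)(Math.Atan2(y, x) * 180.0 / Math.PI);
        return new GuidanceCue(CueKind.Arrow, angle);
    }
}
```

For example, with the camera at the origin looking along +Z, a target straight ahead yields the target icon, while a target directly to the right yields an arrow at 0 degrees. A Unity version would more likely project the target with the camera's own viewport conversion and test against the rectangular screen bounds.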

Phase 6: Testing and Refining

Testing was continuous at every stage. It covered the accuracy of Area Target tracking, the smoothness of navigation, the readability of all instructions, and the stability of AR object anchoring and guidance cues. Feedback from user testing drove incremental changes to the UI elements, interaction timing, and AR settings, refining the overall user experience.

The DIY Studio Guide XR app is still in development, but it has already transformed a dense, text-based manual into an interactive, easy-to-use visual guide. By providing both a convenient 2D interface and a flexible AR mode, it gives students and faculty adaptable guidance for using the recording studio equipment confidently. The project drew on the Unity engine, the Vuforia SDK with Area Targets and LiDAR scanning, C# for programming, Figma and Adobe Illustrator for design, and Adobe After Effects for motion graphics.

Interaction and graphics anchoring test

Workflow Storyboard Design